Adding a robots.txt file to your StoreFront

The robots.txt file is an industry standard that search engine crawlers read to find out which parts of a site they are allowed to interact with and which areas they should avoid. This tutorial explains how to create a custom robots.txt file that changes the instructions crawlers receive for your StoreFront.

First, let's look at the default robots.txt file that UltraCart serves if nothing is configured on your StoreFront.

    # Hello Robots and Crawlers! We're glad you are here, but we would
    # prefer you not create hundreds and hundreds of carts.
    User-agent: *
    Disallow: /cgi-bin/UCEditor
    Disallow: /cgi-bin/UCSearch
    Disallow: /merchant/signup/signup2Save.do
    Disallow: /merchant/signup/signupSave.do
    # Sitemap files
    Sitemap: http://fna00.ultracartstore.com/sitemapsdotorg_index.xml

You'll notice that UltraCart automatically calculates the URL where the crawler can fetch the sitemap file as well. This helps with making sure crawlers are properly indexing your entire site.

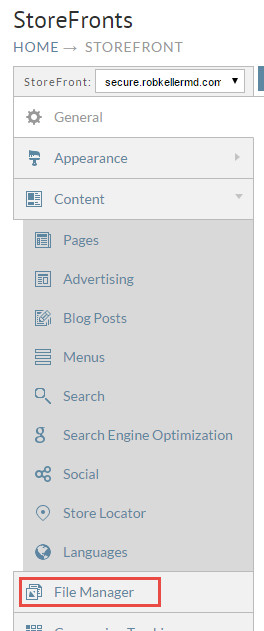
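To see how a well-behaved crawler would interpret the default rules, they can be fed to Python's standard-library `urllib.robotparser`. This is only a sketch for checking your understanding of the file and is not part of UltraCart. The `/index.html` path is a hypothetical page chosen for illustration, and `site_maps()` requires Python 3.8 or later.

```python
from urllib.robotparser import RobotFileParser

# The default rules quoted in this tutorial.
DEFAULT_ROBOTS_TXT = """\
# Hello Robots and Crawlers! We're glad you are here, but we would
# prefer you not create hundreds and hundreds of carts.
User-agent: *
Disallow: /cgi-bin/UCEditor
Disallow: /cgi-bin/UCSearch
Disallow: /merchant/signup/signup2Save.do
Disallow: /merchant/signup/signupSave.do
# Sitemap files
Sitemap: http://fna00.ultracartstore.com/sitemapsdotorg_index.xml
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text from a string instead of fetching it over HTTP."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


parser = build_parser(DEFAULT_ROBOTS_TXT)
base = "http://fna00.ultracartstore.com"

# The cart editor URL is disallowed for every crawler ("User-agent: *").
cart_allowed = parser.can_fetch("*", base + "/cgi-bin/UCEditor")

# Hypothetical content page: no Disallow rule matches it, so it is allowed.
home_allowed = parser.can_fetch("*", base + "/index.html")

# Sitemap URLs declared in the file.
sitemaps = parser.site_maps()

print("cart editor allowed:", cart_allowed)
print("home page allowed:", home_allowed)
print("sitemaps:", sitemaps)
```

Note that `Disallow` rules match by path prefix, so any URL beginning with `/cgi-bin/UCEditor` is covered by the first rule.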

To create a custom robots.txt file, navigate to the file manager on your StoreFront:

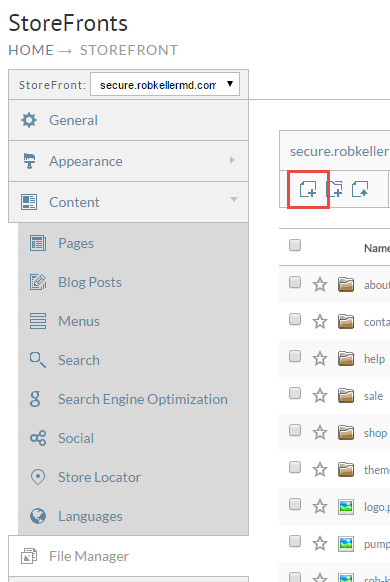

Next, click the new file button as shown below.

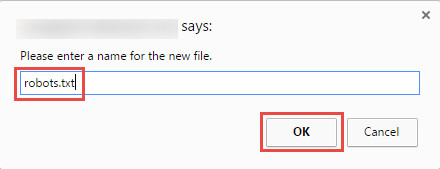

When the dialog pops up, enter "robots.txt" and click on "OK".

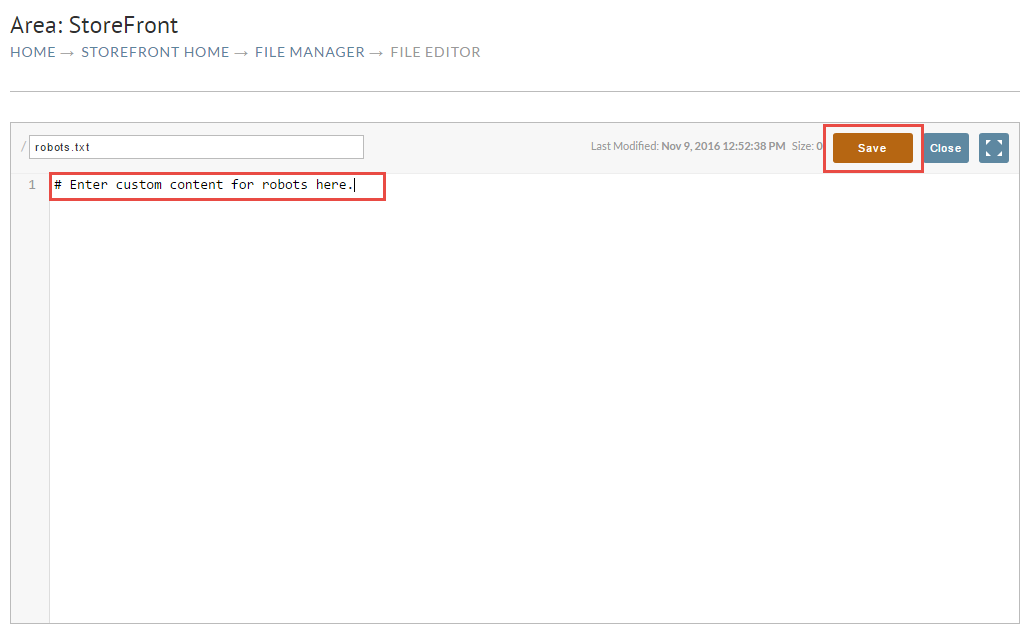

Enter the custom "robots.txt" file content and click OK.
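Before pasting custom content into the editor, it can help to confirm that the rules behave the way you intend. The sketch below uses Python's standard-library `urllib.robotparser` to test a hypothetical custom file. The `/private/` folder, the `ExampleBot` crawler name, and the `/index.html` page are all made up for illustration, and whether you keep the default cart rules is your own choice.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical custom content: keeps two of the default cart rules, hides an
# illustrative /private/ folder, and turns away one made-up crawler entirely.
CUSTOM_ROBOTS_TXT = """\
User-agent: *
Disallow: /cgi-bin/UCEditor
Disallow: /cgi-bin/UCSearch
Disallow: /private/

User-agent: ExampleBot
Disallow: /
"""


def is_allowed(robots_txt: str, user_agent: str, path: str) -> bool:
    """Return True if user_agent may fetch path under the given rules."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, path)


# (user agent, path) pairs to check against the custom rules.
checks = [
    ("Googlebot", "/index.html"),
    ("Googlebot", "/private/notes.html"),
    ("Googlebot", "/cgi-bin/UCSearch"),
    ("ExampleBot", "/index.html"),
]

results = {
    (agent, path): is_allowed(CUSTOM_ROBOTS_TXT, agent, path)
    for agent, path in checks
}

for (agent, path), allowed in results.items():
    print(f"{agent:<10} {path:<22} {'allowed' if allowed else 'blocked'}")
```

Only the text inside the triple-quoted string would go into the robots.txt file itself. Keep in mind that robots.txt is advisory: reputable crawlers honor it, but it does not prevent access by software that ignores it.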