Introduction

UltraCart’s Data Warehouse (BigQuery) feature allows for a business to quickly setup a data warehouse of their customers, items, orders and auto order information with Google’s BigQuery database service. UltraCart will handle the data transfer into BigQuery on an automated base. Businesses can then use the information to feed support systems, write reports, and join up with other data systems for operational analysis.

Additional Benefits:

easy reporting in Google Data Studio / Microsoft Power BI

connect data to Microsoft Excel or Google Sheets

extract querying using the BigQuery CLI or SDK to extract the data set

Providing Users Access to the Data Warehouse

ATTENTION:

The OWNER USER is the only user that can grant access to the Data Warehouse (BigQuery) integration.

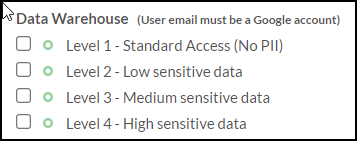

In order for a user to access your data warehouse, the owner of the UltraCart account must grant the individual user permissions. The email associated with the user must be a Google account email. User management is done under Configuration → Account & Users → Users. The permissions that can be granted to the user are:

We recommend that you grant the minimum level of data access that is absolutely necessary for the individual user.

Google Project Location

For the location of your Data Warehouse (BigQuery) project in the Google Cloud navigate to:

Navigate to Configuration → Developer → Data Warehouse (BigQuery)

This page will provide you with the project URL, data set descriptions, etc.

Data Security

UltraCart utilizes Google Cloud IAM to limit access to the BigQuery project to only those users on the account that have been granted access to it. UltraCart also utilizes BigQuery column level security to restrict access to certain columns within various tables to specific levels of users. Whenever data is restricted, a SHA-256 hash of the value will be available in another column to be used in queries instead of the underlying PII data. Having this fine grained data access allows a business owner to provide limited access to the valuable corporate data without exposing customer lists, etc. We encourage owners to only grant the lowest level of access possible to achieve the necessary business result.

Custom Reports

If your business does not have the technical knowledge to work with SQL or report builder tools, UltraCart Professional Services is available to write custom reports and publish them into your UltraCart account. Please contact support@ultracart.com with your report inquiries.

Google BigQuery tutorials

To learn more about using Google BigQuery, please see the following:

FAQ

What is a data warehouse?

A data warehouse is an enterprise grade system that is used for reporting and data analysis and is a core component of business intelligence. Data warehouses are the central repositories where data from one or more different sources are brought together for analysis. Often times these data stores contain both current and historical copies of data.

What is a BigQuery?

BigQuery is an enterprise data warehouse that solves the problem of operating the data warehouse by enabling super-fast SQL queries using the processing power of Google's infrastructure. Simply move your data into BigQuery and let Google handle the hard work. You can control access to both the project and your data based on your business needs, such as giving others the ability to view or query your data.

How does this data warehouse work?

UltraCart tracks what changes are happening to your data and streams updates into different tables within BigQuery using secure communication with your Google cloud project.

What UltraCart plans support it?

All pricing plans have access to the data warehouse.

How often does data update?

The updates stream into the data warehouse with a 1-2 minute delay.

What data sets are supported?

At this time the objects that are pushed into your data warehouse are:

affiliates

affiliate clicks

affiliate ledger

auto orders

customers

gift certificates

items

orders

rotating transaction gateway history

screen recordings

storefront customers

storefront experiments

storefront upsell offers

storefront upsell offer events

storefront upsell paths

storefronts

towerdata email intelligence

What is the data model?

Each record within the BigQuery table is a hierarchy object that is provided to BigQuery as JSON data. It is almost identical to the REST API object model with a few difference covered below.

How does the data model different from the REST API?

All of the date time values on the records stored in BigQuery are in UTC. This differs from the typical EST that UltraCart runs in. The other change is that String[] type values have to be further nested to accommodate the way BigQuery loads data in.

What tools can connect to BigQuery?

There are a large variety of tools that can talk to BigQuery including:

SDKs in almost every popular language

Google Sheets

Google Data Studio

Microsoft PowerBI

Tableau

Qlik

Looker

Almost every popular analysis tool out there will have a connection to BigQuery.

What query language does BigQuery support?

BigQuery works with SQL as it’s query language. Documentation on their syntax is located here.

How does this data format differ from a traditional SQL database?

Traditional SQL databases do not operate on nested data within each row of the database. BigQuery fully supports nested hierarchical data within the table schema. If you’re used to SQL, but new to BigQuery make sure to look at the UNNEST function.

What does this feature cost?

UltraCart costs the first $1.00 of expense associated with the BigQuery project hosting the account data. That is enough to cover all of the data storage expense and bandwidth associated with the updates that occur over time. The actual cost of BigQuery depends solely on the amount of queries that you execute against the environment. For most merchants they will only spend a few dollars a month on their data warehouse environment. UltraCart will once a month apply the previous month’s data warehouse usage to your bill.

The full pricing for BigQuery is located here. BigQuery is basically:

$0.02/GB of storage with the first 10GB free.

$5.00/TB of data processed during queries.

There are no hourly fees for having a BigQuery data set which makes it incredibly economical for more businesses.

What use cases can a business solve with a data warehouse?

Build complex custom reports based upon your order data.

Aggregation of order data from linked accounts into a single database table.

Scheduled reports (using Google Data Studio)

Company dashboards (using Google Data Studio)

and more…

Are linked accounts supported?

Yes, the parent account will contain separate data sets which encompass all the data across all the linked accounts.

Can I join data within the UltraCart BigQuery project with another BigQuery project?

As long as your user has permission to the data within both projects, you can write a query that references the UltraCart hosted tables along side of your own project’s data. This authentication schema and capability is what makes BigQuery incredibly powerful!

What other data sets can you put in BigQuery to join up?

Common data sets that people drive into their BigQuery databases are:

Google Adwords spend

Facebook spend

What happens as the data model evolves?

If we add new fields to the data model which would change the schema, UltraCart will automatically recreate your BigQuery table instead of just performing an update.

What about other data warehouse software other than BigQuery?

At this time the data warehouse functionality is limited to BigQuery. If in the future another popular cloud native data warehouse software comes along we will consider an integration with it.

Enhancing Tables with Views

One of the powerful mechanisms of BigQuery is the ability to create a view. A view is a query of an existing table that then acts like a new table for the purposes of querying data. This is a powerful concept and one that everyone should learn. Views are not copies of your data so they are as live and reactive to changes as the base table.

There are two scenarios that you want to use a view for:

Flatten down the complex data into a simpler form for the purposes of reporting on in tools like Google Data Studio of Power BI.

Adding additional calculations into the base table for further analysis.

Sample Queries

Important Note: If you’re going to write SQL queries with the nested data that is in the BigQuery tables, you’ll want to learn CROSS JOIN UNNEST and other array operations documented in the BigQuery documentation.

Adding Calculations to Auto Orders

The following query shows how to add:

Count of the number of rebills

Life time value of the customer

Average order value of the customer

How long the customer was active before the auto order was canceled or disabled

You can run this query to see the results. Then save this query as a view named “uc_auto_orders_with_stats”.

SELECT

-- Count number of rebills

ARRAY_LENGTH(rebill_orders) as number_of_rebills,

-- Life time value by adding original order plus rebill values

COALESCE(original_order.summary.total.value, 0) - COALESCE(original_order.summary.total_refunded.value, 0) +

COALESCE((

select SUM(COALESCE(r.summary.total.value, 0) - COALESCE(r.summary.total_refunded.value, 0)) from UNNEST(rebill_orders) as r

), 0) as life_time_value,

-- Average order value by adding original order plus rebill values then dividing by total number of orders

ROUND(

COALESCE(original_order.summary.total.value, 0) - COALESCE(original_order.summary.total_refunded.value, 0) +

COALESCE((

select SUM(COALESCE(r.summary.total.value, 0) - COALESCE(r.summary.total_refunded.value, 0)) from UNNEST(rebill_orders) as r

), 0) / (1 + ARRAY_LENGTH(rebill_orders))

,2) as average_order_value,

-- How long did the auto order last? Value will be null if still active

DATE_DIFF(COALESCE(canceled_dts, disabled_dts), original_order.creation_dts, DAY) as duration_days,

-- Retain all the other columns on uc_auto_orders

*

FROM `my-data-warehouse.my_dataset.uc_auto_orders`

Adding EST time zone dates to orders

BigQuery is going to treat all dates as the UTC time zone by default. This can cause some challenges when performing certain types of reports. The following query can be used to create a view “uc_orders_est” which contains fields that are shifted into the EST time zone.

select DATETIME(TIMESTAMP(o.creation_dts), "America/New_York") as creation_datetime_est, DATETIME(TIMESTAMP(o.payment.payment_dts), "America/New_York") as payment_datetime_est, DATETIME(TIMESTAMP(o.shipping.shipping_date), "America/New_York") as shipping_datetime_est, DATETIME(TIMESTAMP(o.refund_dts), "America/New_York") as refund_datetime_est, DATE(TIMESTAMP(o.creation_dts), "America/New_York") as creation_date_est, DATE(TIMESTAMP(o.payment.payment_dts), "America/New_York") as payment_date_est, DATE(TIMESTAMP(o.shipping.shipping_date), "America/New_York") as shipping_date_est, DATE(TIMESTAMP(o.refund_dts), "America/New_York") as refund_date_est, o.* from `my-data-warehouse.my_dataset.uc_orders` as o

Active Auto Order Next Rebill

SELECT auto_order_oid, auto_order_code, status, enabled, original_order.order_id as original_order_id, orders.current_stage as original_order_current_stage, original_order.billing.first_name, original_order.billing.last_name, original_order.billing.email, original_order.shipping.day_phone_e164, original_order.shipping.state_region, original_order.shipping.postal_code, original_order.shipping.country_code, item_offset, future_schedules.item_id as future_schedule_item_id, future_schedules.shipment_dts as future_schedule_shipment_dts, future_schedules.unit_cost as future_schedule_unit_cost, future_schedules.rebill_count as future_schedule_rebill_count, REGEXP_EXTRACT(failure_reason, '^[^\n]+') as failure_reason, FROM `my-data-warehouse.my_dataset.uc_auto_orders` as auto_orders JOIN `my-data-warehouse.my_dataset.uc_orders` as orders on orders.order_id = auto_orders.original_order_id, UNNEST(auto_orders.items) as items WITH OFFSET AS item_offset, UNNEST(items.future_schedules) as future_schedules WITH OFFSET AS future_schedule_offset WHERE enabled = true and future_schedule_offset = 0 and orders.payment.payment_dts is not null and orders.payment.payment_method <> 'Quote Request' and orders.current_stage <> 'Rejected' and (items.no_order_after_dts is null or items.no_order_after_dts >= current_date()) ORDER BY future_schedules.shipment_dts ASC

Revenue Per Item Over a Certain Time Period

SELECT i.merchant_item_id, sum(i.total_cost_with_discount.value) as revenue_for_item, sum(i.quantity) as units_sold FROM `my-data-warehouse.my_dataset.uc_orders` CROSS JOIN UNNEST(items) as i WHERE creation_dts between "2021-07-01" and "2021-08-01" and i.kit_component = false group by i.merchant_item_id order by i.merchant_item_id

In this query we’re interacting with the order table. We want to join the items (which are a nested array) as another table. We use the CROSS JOIN UNNEST operation to do this. Once we have the tables joined it’s a pretty standard group by and sum over type query.

Query Table Size in GB

select table_id, ROUND(sum(size_bytes) / (1024 * 1024), 2) as size_in_gb from `my-data-warehouse.my_dataset.__TABLES__` group by table_id

This query will show you the size of the tables you have in BigQuery.

Order ID Experiment Variations

SELECT order_id,

(

select params.value.text from UNNEST(events.params) as params where params.name = 'expid'

) as experiment_id,

(

select params.value.num from UNNEST(events.params) as params where params.name = 'expvid'

) as experiment_variation_number

FROM `my-data-warehouse.my_dataset.uc_storefront_recordings`

CROSS JOIN UNNEST(page_views) as page_views

CROSS JOIN UNNEST(page_views.events) as events

WHERE order_id is not null and events.name = 'experiment'

This query will show which experiment and variation each order went through (this requires screen recordings).

Orders For Specific Items Shipped By a User on a Specific Date

SELECT distinct order_id FROM `my-data-warehouse.my_dataset.ultracart_orders` CROSS JOIN UNNEST(items) as i WHERE DATE(TIMESTAMP(shipping.shipping_date), "America/New_York") = "2021-12-09" and i.shipped_by_user = 'bob' and i.merchant_item_id in ( 'PRODUCT-A', 'PRODUCT-B' ) order by order_id

Auto Orders with Pre-shipment Notice Date more than one week prior to shipment.

SELECT ao.original_order_id, DATE_DIFF(item.next_preshipment_notice_dts, item.next_shipment_dts, DAY) as days_diff FROM `my-data-warehouse.my_dataset.uc_auto_orders` as ao CROSS JOIN UNNEST(items) as item WHERE enabled = True and item.next_preshipment_notice_dts is not null and DATE_DIFF(item.next_preshipment_notice_dts, item.next_shipment_dts, DAY) < -7

Orders with a Specific Custom Field Value

SELECT order_id, payment.payment_status, creation_dts, checkout.custom_field3, checkout.custom_field4, checkout.custom_field5, checkout.custom_field6, checkout.custom_field7 FROM `my-data-warehouse.my_dataset.uc_orders` WHERE checkout.custom_field4 = '1234' ORDER BY order_id

List All Upsell Paths, Variation and Upsells

SELECT path.name as path_name, variation.name as variation_name, offer.name as offer_name

FROM `my-data-warehouse.my_dataset.uc_storefront_upsell_paths` as path

CROSS JOIN UNNEST(variations) as variation

CROSS JOIN UNNEST(visibility_ordered_offer_oids) as variation_offer

INNER JOIN `my-data-warehouse.my_dataset.uc_storefront_upsell_offers` as offer on

offer.storefront_upsell_offer_oid = variation_offer.value

where path.storefront_oid = 1234 -- Replace with your StoreFront OID

ORDER BY path.path_order

Upsell Screenshot List

SELECT

sf.host_name, path.name as path_name,

path_visibility_offer_offset + 1 as path_visibility_offer_offset,

variation.name as variation_name,

offer.name as offer_name,

offer.screenshot_small_full_length_url,

offer.screenshot_large_full_length_url

FROM `my-data-warehouse.my_dataset.uc_storefront_upsell_paths` as path

CROSS JOIN UNNEST(variations) as variation

CROSS JOIN UNNEST(visibility_ordered_offer_oids) as variation_offer WITH OFFSET AS path_visibility_offer_offset

INNER JOIN `my-data-warehouse.my_dataset.uc_storefront_upsell_offers` as offer on

offer.storefront_upsell_offer_oid = variation_offer.value

INNER JOIN `my-data-warehouse.my_dataset.uc_storefronts` as sf on sf.storefront_oid = path.storefront_oid

where offer.active = true and sf.locked = false

ORDER BY sf.host_name, path.path_order, path_visibility_offer_offset

Auto Orders with Missed Pre-shipment Notices

SELECT original_order_id, aoi.original_item_id, aoi.next_preshipment_notice_dts, aoi.preshipment_notice_sent, aoi.next_shipment_dts FROM `my-data-warehouse.my_dataset.uc_auto_orders` ao CROSS JOIN UNNEST(items) aoi where aoi.preshipment_notice_sent = false and aoi.next_preshipment_notice_dts is not null and aoi.next_preshipment_notice_dts < aoi.next_shipment_dts and aoi.next_preshipment_notice_dts < CURRENT_DATETIME() and (aoi.no_order_after_dts is null or aoi.no_order_after_dts > CURRENT_DATETIME()) and ao.enabled is true

Auto Order Logs Messages After Pre-shipment Notice and Before Shipment Should Take Place

SELECT original_order_id, aoi.original_item_id, aoi.next_preshipment_notice_dts, aoi.preshipment_notice_sent, aoi.next_shipment_dts,

ARRAY(

select AS STRUCT log_dts, log_message

from UNNEST(ao.logs)

where log_dts > aoi.next_preshipment_notice_dts

) as logs

FROM `my-data-warehouse.my_dataset.uc_auto_orders` ao

CROSS JOIN UNNEST(items) aoi

where aoi.preshipment_notice_sent = true

and aoi.next_preshipment_notice_dts is not null

and aoi.next_preshipment_notice_dts < aoi.next_shipment_dts

and aoi.next_preshipment_notice_dts < CURRENT_DATETIME()

and (aoi.no_order_after_dts is null or aoi.no_order_after_dts between DATETIME_TRUNC(CURRENT_DATETIME(), DAY) AND CURRENT_DATETIME())

and ao.enabled is true

Customer LTV Modeling

WITH email_first_rows as (

SELECT billing.email,

MIN(payment.payment_dts) as first_order_dts

FROM `my-data-warehouse.my_dataset.uc_orders`

WHERE billing.email <> '' and payment.payment_dts is not null

and current_stage in ('Shipping Department', 'Completed Order')

group by billing.email

),

order_rows as (

select

email_first_rows.email,

first_order_dts,

o2.payment.payment_dts,

o2.summary.total.value as total,

case when o2.payment.payment_dts <> first_order_dts then o2.summary.total.value else 0 end as additional_total_revenue,

DATE_DIFF(o2.payment.payment_dts, email_first_rows.first_order_dts, MONTH) as month

from email_first_rows

INNER JOIN `my-data-warehouse.my_dataset.uc_orders` o2 on o2.billing.email = email_first_rows.email

and o2.payment.payment_dts is not null and o2.current_stage in ('Shipping Department', 'Completed Order')

ORDER BY email_first_rows.email, month

),

month_rows as (

select email, month, min(payment_dts) as payment_dts, sum(additional_total_revenue) as additional_total_revenue,

sum(total) as total, count(*) as order_count from order_rows group by email, month

),

month_ltv as (

select email, month,

SUM(order_count) OVER (current_month_window) AS month_order_count,

SUM(order_count) OVER (months_since_first_order) AS cumuliative_order_count,

SUM(additional_total_revenue) OVER (current_month_window) AS month_additional_total_revenue,

SUM(additional_total_revenue) OVER (months_since_first_order) AS cumulative_additional_total_revenue,

SUM(total) OVER (current_month_window) AS month_total,

SUM(total) OVER (months_since_first_order) AS cumulative_total

from month_rows

WINDOW months_since_first_order AS (

PARTITION BY email ORDER BY payment_dts asc

ROWS BETWEEN UNBOUNDED PRECEDING AND CURRENT ROW

),

current_month_window AS (

PARTITION BY email ORDER BY payment_dts asc

ROWS BETWEEN CURRENT ROW AND CURRENT ROW

)

order by email, month

)

select * from month_ltv

Find Order Id by Transaction Value

SELECT order_id FROM `my-data-warehouse.my_dataset.uc_orders` CROSS JOIN UNNEST(payment.transactions) t CROSS JOIN UNNEST(t.details) d WHERE d.value = 'abc123'

Auto Order Weekly Cohort by Main Item Id

WITH ao_rows as (

-- Group in weekly cohorts

SELECT DATE_TRUNC(original_order.creation_dts, WEEK) as weekly_cohort,

original_order_id,

-- Consider the first auto order item as the main subscription

items[safe_offset(0)].original_item_id as main_auto_order_item_id,

-- integer 1 = enabled 0 = disabled that can be summed

case when enabled then 1 else 0 end as enabled,

original_order.creation_dts,

-- has the original order been refunded?

case when original_order.summary.total_refunded.value > 0 then 1 else 0 end as original_order_refunded,

-- when was the original order refunded?

original_order.refund_dts as original_order_refund_dts,

-- how many unrefunded rebills have there been?

(

select count(*) from UNNEST(rebill_orders) as r where r.summary.total_refunded.value is null

) as rebill_order_unrefunded,

-- have any of the rebills been refunded?

coalesce((

select 1 from UNNEST(rebill_orders) as r where r.summary.total_refunded.value > 0 LIMIT 1

), 0) as rebill_order_refunded,

-- when was the most recent rebill refund

(

select max(refund_dts) from UNNEST(rebill_orders) as r where r.summary.total_refunded.value > 0

) as rebill_order_refund_dts,

-- how much unrefunded revenue has been generated?

(original_order.summary.total.value) as gross_original_total_revenue,

(coalesce(original_order.summary.total_refunded.value, 0)) as refunded_original_total_revenue,

(original_order.summary.total.value - coalesce(original_order.summary.total_refunded.value, 0)) as net_original_total_revenue,

-- how much unrefunded revenue has been generated?

(coalesce((

select sum(r.summary.total.value) from UNNEST(rebill_orders) as r

), 0)) as gross_rebill_total_revenue,

(coalesce((

select sum(coalesce(r.summary.total_refunded.value, 0)) from UNNEST(rebill_orders) as r

), 0)) as refunded_rebill_total_revenue,

(coalesce((

select sum(r.summary.total.value) - sum(coalesce(r.summary.total_refunded.value, 0)) from UNNEST(rebill_orders) as r

), 0)) as net_rebill_total_revenue,

-- how much unrefunded revenue has been generated?

(original_order.summary.total.value

+ coalesce((

select sum(r.summary.total.value) from UNNEST(rebill_orders) as r

), 0)) as gross_combined_total_revenue,

(coalesce(original_order.summary.total_refunded.value, 0)

+ coalesce((

select sum(coalesce(r.summary.total_refunded.value, 0)) from UNNEST(rebill_orders) as r

), 0)) as refunded_combined_total_revenue,

(original_order.summary.total.value - coalesce(original_order.summary.total_refunded.value, 0)

+ coalesce((

select sum(r.summary.total.value) - sum(coalesce(r.summary.total_refunded.value, 0)) from UNNEST(rebill_orders) as r

), 0)) as net_combined_total_revenue,

-- how many rebills

ARRAY_LENGTH(rebill_orders) as rebill_count

FROM `my-data-warehouse.my_dataset.uc_auto_orders`

-- Filter auto orders that had test orders that no longer exist

where original_order.creation_dts is not null

)

select

-- output by weekly cohort and main item id

weekly_cohort, main_auto_order_item_id,

-- how many auto orders in this group?

count(*) as auto_order_count,

-- how many are still enabled?

sum(enabled) as still_enabled_count,

-- how many have had the original a rebill order refunded?

sum(

case when original_order_refunded > 0 or rebill_order_refunded > 0 then 1 else 0 end

) as refund_count,

-- sum the various types of revenue

sum(gross_original_total_revenue) as gross_original_total_revenue,

sum(refunded_original_total_revenue) as refunded_original_total_revenue,

sum(net_original_total_revenue) as net_original_total_revenue,

sum(gross_rebill_total_revenue) as gross_rebill_total_revenue,

sum(refunded_rebill_total_revenue) as refunded_rebill_total_revenue,

sum(net_rebill_total_revenue) as net_rebill_total_revenue,

sum(gross_combined_total_revenue) as gross_combined_total_revenue,

sum(refunded_combined_total_revenue) as refunded_combined_total_revenue,

sum(net_combined_total_revenue) as net_combined_total_revenue,

-- what is the average number of rebills for people in this cohort?

trunc(avg(rebill_count), 2) as average_number_of_rebills_per_auto_order

from ao_rows

group by weekly_cohort, main_auto_order_item_id

order by weekly_cohort desc, main_auto_order_item_id

Auto Order Future Predictions

WITH future_shipment_rows as ( SELECT auto_order_code, fs.item_id, fs.unit_cost, coalesce(i.arbitrary_quantity, i.original_quantity) as qty, fs.shipment_dts, original_order.billing.email_hash FROM `my-data-warehouse.my_dataset.uc_auto_orders` CROSS JOIN UNNEST(items) as i CROSS JOIN UNNEST(i.future_schedules) fs where fs.shipment_dts < DATETIME_ADD(CURRENT_DATE(), INTERVAL 90 day) and enabled is true ), future_email_rows as ( select email_hash, sum(unit_cost * qty) as future_item_revenue from future_shipment_rows group by email_hash order by email_hash ), future_item_day_rows as ( select item_id,DATETIME_TRUNC(shipment_dts, day) as day, sum(qty) as future_quantity, sum(unit_cost * qty) as future_item_revenue from future_shipment_rows group by item_id, day order by item_id, day ), future_item_month_rows as ( select item_id,DATETIME_TRUNC(shipment_dts, month) as month, sum(qty) as future_quantity, sum(unit_cost * qty) as future_item_revenue from future_shipment_rows group by item_id, month order by item_id, month ) select * from future_item_day_rows

Extract a Marketing List from Order History

SELECT order_id, marketing.mailing_list, billing.*, shipping.* FROM `my-data-warehouse.my_dataset.uc_orders`

Auto Order Churn Overall

WITH auto_order_rows as (

SELECT

auto_order_oid,

CAST(DATETIME_TRUNC(original_order.creation_dts, day) as DATE) as start_dts,

CAST(DATETIME_TRUNC(coalesce(canceled_dts, disabled_dts), day) as DATE) as churn_dts

FROM `my-data-warehouse.my_dataset.uc_auto_orders`

where original_order.current_stage not in ('REJ') and original_order.payment.payment_dts is not null and original_order.payment.test_order is false

),

daily_date_range_rows as (

SELECT date

FROM UNNEST(

GENERATE_DATE_ARRAY((select min(start_dts) from auto_order_rows), CURRENT_DATE(), INTERVAL 1 DAY)

) as date

),

daily_rows as (

select *,

(

select count(*) from auto_order_rows

where start_dts < date and (churn_dts is null or churn_dts >= date)

) as active_at_start,

(

select count(*) from auto_order_rows where

churn_dts = date

) as churned_today,

(

select count(*) from auto_order_rows where

start_dts = date

) as added_today,

(

select count(*) from auto_order_rows

where start_dts <= date and (churn_dts is null or churn_dts > date)

) as active_at_end

from daily_date_range_rows

order by date

),

monthly_date_range_rows as (

SELECT first_of_month, DATE_SUB(DATE_ADD(first_of_month, INTERVAL 1 MONTH), INTERVAL 1 DAY) as end_of_month

FROM UNNEST(

GENERATE_DATE_ARRAY(DATE_TRUNC((select min(start_dts) from auto_order_rows), MONTH), CURRENT_DATE(), INTERVAL 1 MONTH)

) as first_of_month

),

period_rows as (

select

first_of_month as period_start_date,

LEAST(end_of_month, CURRENT_DATE()) as period_end_date,

DATE_DIFF(LEAST(end_of_month, CURRENT_DATE()), first_of_month, day) + 1 as days_in_period

from monthly_date_range_rows

),

daily_rows_in_period as (

select *,

case when period_start_date = daily_rows.date then active_at_start else null end as period_active_at_start,

case when period_end_date = daily_rows.date then active_at_end else null end as period_active_at_end

from period_rows

left join daily_rows on daily_rows.date between period_rows.period_start_date and period_rows.period_end_date

order by period_rows.period_start_date, daily_rows.date

),

churn_stats as (

select

period_start_date as month,

--period_end_date,

days_in_period,

coalesce(max(period_active_at_start), 0) as period_starting_active,

sum(added_today) as added_in_period,

sum(churned_today) as churned_in_period,

coalesce(max(period_active_at_end), 0) as period_ending_active,

ROUND((SAFE_DIVIDE(sum(churned_today), sum(active_at_start))) * days_in_period * 100, 2) as churn_percentage

from daily_rows_in_period

group by period_start_date, period_end_date, days_in_period

order by period_start_date, period_end_date

)

select * except(days_in_period),

case

when added_in_period > churned_in_period then 'growing'

when added_in_period < churned_in_period then 'shrinking'

else ''

end as outcome

from churn_stats where churn_percentage is not null order by month desc

Auto Order Churn by Item

WITH auto_order_rows as (

SELECT

auto_order_oid,

items[SAFE_OFFSET(0)].original_item_id as primary_item_id,

CAST(DATETIME_TRUNC(original_order.creation_dts, day) as DATE) as start_dts,

CAST(DATETIME_TRUNC(coalesce(canceled_dts, disabled_dts), day) as DATE) as churn_dts

FROM `my-data-warehouse.my_dataset.uc_auto_orders`

where original_order.current_stage not in ('REJ') and original_order.payment.payment_dts is not null and original_order.payment.test_order is false

),

primary_item_id_rows as (

select distinct primary_item_id from auto_order_rows order by primary_item_id

),

daily_date_range_rows as (

SELECT date

FROM UNNEST(

GENERATE_DATE_ARRAY((select min(start_dts) from auto_order_rows), CURRENT_DATE(), INTERVAL 1 DAY)

) as date

),

item_daily_date_range_rows as (

select * from primary_item_id_rows

right join daily_date_range_rows on 1 = 1

),

item_daily_rows as (

select *,

(

select count(*) from auto_order_rows

where auto_order_rows.primary_item_id = item_daily_date_range_rows.primary_item_id and

start_dts <= date and (churn_dts is null or churn_dts >= date)

) as active_at_start,

(

select count(*) from auto_order_rows where

auto_order_rows.primary_item_id = item_daily_date_range_rows.primary_item_id and

churn_dts = date

) as churned_today,

(

select count(*) from auto_order_rows where

auto_order_rows.primary_item_id = item_daily_date_range_rows.primary_item_id and

start_dts = date

) as added_today

from item_daily_date_range_rows

order by date

),

monthly_date_range_rows as (

SELECT first_of_month, DATE_SUB(DATE_ADD(first_of_month, INTERVAL 1 MONTH), INTERVAL 1 DAY) as end_of_month

FROM UNNEST(

GENERATE_DATE_ARRAY(DATE_TRUNC((select min(start_dts) from auto_order_rows), MONTH), CURRENT_DATE(), INTERVAL 1 MONTH)

) as first_of_month

),

period_rows as (

select

first_of_month as period_start_date,

LEAST(end_of_month, CURRENT_DATE()) as period_end_date,

DATE_DIFF(LEAST(end_of_month, CURRENT_DATE()), first_of_month, day) + 1 as days_in_period

from monthly_date_range_rows

),

item_daily_rows_in_period as (

select *,

case when period_start_date = item_daily_rows.date then active_at_start else null end as period_active_at_start,

case when period_end_date = item_daily_rows.date then active_at_start - churned_today else null end as period_active_at_end

from period_rows

left join item_daily_rows on item_daily_rows.date between period_rows.period_start_date and period_rows.period_end_date

order by period_rows.period_start_date, item_daily_rows.date

),

churn_stats as (

select primary_item_id, period_start_date, period_end_date, days_in_period,

max(period_active_at_start) period_starting_active,

sum(added_today) as added_in_period,

sum(churned_today) as churned_in_period,

max(period_active_at_end) period_ending_active,

ROUND((SAFE_DIVIDE(sum(churned_today), sum(active_at_start))) * days_in_period * 100, 2) as churn_percentage

from item_daily_rows_in_period

group by primary_item_id, period_start_date, period_end_date, days_in_period

order by primary_item_id, period_start_date, period_end_date

)

select * except(days_in_period),

case

when added_in_period > churned_in_period then 'growing'

when added_in_period < churned_in_period then 'shrinking'

else ''

end as outcome

from churn_stats where churn_percentage is not null

Affiliate Metrics

WITH

email_affiliate_owner_rows as (

select billing.email_hash,

creation_dts,

affiliates[SAFE_OFFSET(0)].affiliate_oid

from `my-data-warehouse.my_dataset.uc_orders`

where

affiliates[SAFE_OFFSET(0)].affiliate_oid is not null and billing.email_hash is not null

-- and payment.payment_dts >= @start_date and payment.payment_dts <= @end_date

ORDER BY creation_dts desc

),

repeat_customer_orders as (

select

o1.order_id,

o1.billing.email_hash,

o1.summary.total.value - o1.summary.tax.value as gross_revenue,

COALESCE(o1.summary.total_refunded.value, 0) - COALESCE(o1.summary.tax_refunded.value, 0) as gross_refunded,

(

select sum(cogs * quantity) from UNNEST(o1.items)

) as item_cogs,

COALESCE(o1.summary.actual_shipping.value, 0) as actual_shipping,

COALESCE(o1.summary.actual_fulfillment.value, 0) as actual_fulfillment,

o1.items[SAFE_OFFSET(0)].merchant_item_id as primary_item_id,

email_affiliate_owner_rows.affiliate_oid,

COALESCE((

select sum(transaction_amount)

from UNNEST(o1.affiliates[SAFE_OFFSET(0)].ledger_entries)

), 0) as affiliate_commission,

0 as active_subscription,

o1.creation_dts,

'repeat customer' as order_type,

row_number() over (partition by order_id order by email_affiliate_owner_rows.creation_dts desc) as rn

from `my-data-warehouse.my_dataset.uc_orders` o1

join email_affiliate_owner_rows on email_affiliate_owner_rows.email_hash = o1.billing.email_hash and email_affiliate_owner_rows.creation_dts <= o1.creation_dts

where

-- not an auto order and no affiliate associated

o1.auto_order is null and o1.affiliates[SAFE_OFFSET(0)].affiliate_oid is null

--and o1.payment.payment_dts >= @start_date and o1.payment.payment_dts <= @end_date

),

-- Calculate values per individual orders

order_rows as (

-- Straight sale or original rebills

SELECT

order_id,

billing.email_hash,

summary.total.value - summary.tax.value as gross_revenue,

COALESCE(summary.total_refunded.value, 0) - COALESCE(summary.tax_refunded.value, 0) as gross_refunded,

(

select sum(cogs * quantity) from UNNEST(items)

) as item_cogs,

COALESCE(summary.actual_shipping.value, 0) as actual_shipping,

COALESCE(summary.actual_fulfillment.value, 0) as actual_fulfillment,

o.items[SAFE_OFFSET(0)].merchant_item_id as primary_item_id,

o.affiliates[SAFE_OFFSET(0)].affiliate_oid as affiliate_oid,

COALESCE((

select sum(transaction_amount)

from UNNEST(o.affiliates[SAFE_OFFSET(0)].ledger_entries)

), 0) as affiliate_commission,

-- If this is the original order then see if it's an active subscription

case

when order_id = auto_order.original_order_id and auto_order.enabled is true then 1

else 0

end as active_subscription,

creation_dts,

case when auto_order is null then 'straight sale' else 'auto order original' end as order_type

FROM `my-data-warehouse.my_dataset.uc_orders` o

WHERE current_stage in ('Shipping Department', 'Completed Order')

and (auto_order is null or order_id = auto_order.original_order_id)

--and payment.payment_dts >= @start_date and payment.payment_dts <= @end_date

union all

-- rebill orders

select

o1.order_id,

o1.billing.email_hash,

o1.summary.total.value - o1.summary.tax.value as gross_revenue,

COALESCE(o1.summary.total_refunded.value, 0) - COALESCE(o1.summary.tax_refunded.value, 0) as gross_refunded,

(

select sum(cogs * quantity) from UNNEST(o1.items)

) as item_cogs,

COALESCE(o1.summary.actual_shipping.value, 0) as actual_shipping,

COALESCE(o1.summary.actual_fulfillment.value, 0) as actual_fulfillment,

o1.items[SAFE_OFFSET(0)].merchant_item_id as primary_item_id,

o2.affiliates[SAFE_OFFSET(0)].affiliate_oid as affiliate_oid,

COALESCE((

select sum(transaction_amount)

from UNNEST(o1.affiliates[SAFE_OFFSET(0)].ledger_entries)

), 0) as affiliate_commission,

0 as active_subscription,

o1.creation_dts,

'auto order rebill' as order_type

from `my-data-warehouse.my_dataset.uc_orders` o1

join `my-data-warehouse.my_dataset.uc_orders` o2 on o1.auto_order.original_order_id = o2.order_id

where o1.auto_order.original_order_id != o1.order_id

--and o2.payment.payment_dts >= @start_date and o2.payment.payment_dts <= @end_date

and o2.affiliates[SAFE_OFFSET(0)].affiliate_oid is not null

union all

select * except (rn) from repeat_customer_orders where rn = 1

ORDER BY creation_dts desc

),

-- Join in the affiliate information and calculate the profit

order_by_affiliate_rows as (

select order_rows.*, aff.email_hash as aff_email_hash,

gross_revenue - gross_refunded - item_cogs - actual_shipping - actual_fulfillment - affiliate_commission as profit

from order_rows

LEFT OUTER JOIN `my-data-warehouse.my_dataset.uc_affiliates` aff on aff.affiliate_oid = order_rows.affiliate_oid

),

-- Per affiliate/customer email sum up the revenue, profit and count of orders

order_by_affiliate_email_rows as (

select

affiliate_oid,

email_hash,

sum(gross_revenue) as gross_revenue,

sum(profit) as profit,

count(*) as order_count,

sum(active_subscription) as active_subscriptions

from order_by_affiliate_rows

group by affiliate_oid, email_hash

),

-- Per item sum up the revenue, profit and count of orders

order_by_primary_item_rows as (

select

primary_item_id,

sum(gross_revenue) as gross_revenue,

sum(profit) as profit,

count(*) as order_count,

sum(active_subscription) as active_subscriptions

from order_by_affiliate_rows

group by primary_item_id

order by primary_item_id

),

-- Calculate the metrics per affiliate

affiliate_stat_rows as (

select

affiliate_oid,

ROUND(avg(gross_revenue),2) as avg_gross_revenue_per_customer,

ROUND(avg(profit),2) as avg_profit_per_customer,

ROUND(avg(order_count),2) as avg_orders_per_customer,

sum(order_count) as sum_order_count,

sum(gross_revenue) as sum_gross_revenue,

sum(profit) as sum_profit,

sum(active_subscriptions) as sum_active_subscriptions

from order_by_affiliate_email_rows

where affiliate_oid is not null

group by affiliate_oid

order by affiliate_oid

),

-- Calculate the metrics per affiliate / item id

affiliate_primary_item_id_stat_rows as (

select

affiliate_oid,

primary_item_id,

ROUND(avg(gross_revenue),2) as avg_gross_revenue_per_customer,

ROUND(avg(profit),2) as avg_profit_per_customer,

count(*) as order_count,

sum(gross_revenue) as sum_gross_revenue,

sum(profit) as sum_profit,

sum(active_subscription) as sum_active_subscriptions

from order_by_affiliate_rows

where affiliate_oid is not null

group by affiliate_oid, primary_item_id

order by affiliate_oid, primary_item_id

),

-- Calculate the metrics overall

overall_stat_rows as (

select

COUNT(DISTINCT affiliate_oid) as number_of_affiliates,

ROUND(avg(gross_revenue),2) as avg_gross_revenue_per_customer,

ROUND(avg(profit),2) as avg_profit_per_customer,

ROUND(avg(order_count),2) as avg_orders_per_customer,

sum(order_count) as sum_order_count,

sum(gross_revenue) as sum_gross_revenue,

sum(profit) as sum_profit,

sum(active_subscriptions) as sum_active_subscriptions

from order_by_affiliate_email_rows

where affiliate_oid is not null

)

-- Five different data sets that can be viewed

select * from overall_stat_rows

-- select * from affiliate_stat_rows

-- select * from affiliate_primary_item_id_stat_rows

-- select * from order_by_primary_item_rows

-- select * except (email_hash, aff_email_hash) from order_by_affiliate_rows where affiliate_oid is not null

Customer Cohort Revenue

with

customer_bulk_rows as (

-- everythign is grouped by hashed email to count as a unique customer

select billing.email_hash,

-- initial items on the order (except kit components)

ARRAY(

select as struct item.merchant_item_id from UNNEST(o1.items) item where kit_component is false

) as initial_item_ids,

-- advertising sources

(

select value from UNNEST(properties) where name = 'ucasource'

) as utm_source,

(

select value from UNNEST(properties) where name = 'ucacampaign'

) as utm_campaign,

affiliates[SAFE_OFFSET(0)].affiliate_oid,

-- core values about each order that we want in an array.

ARRAY(

select as struct order_id, summary.total.value as total_revenue, creation_dts, cast(creation_dts as date) as creation_date

from `my-data-warehouse.my_dataset.uc_orders` o2 where o2.billing.email_hash = o1.billing.email_hash

) as order_array,

-- clever use of run numbering which will be filtered in the next query to prevent correlation error

ROW_NUMBER() OVER ( PARTITION BY billing.email_hash ORDER BY creation_dts ) AS rownum,

from `my-data-warehouse.my_dataset.uc_orders` o1

where billing.email_hash is not null

order by billing.email_hash

),

customer_rows as (

select *,

-- calculate their cohort startin group

(

select DATE_TRUNC(min(creation_date), MONTH) from UNNEST(order_array)

) as cohort_group,

-- calculate the last period they had any activity

(

select DATE_TRUNC(max(creation_date), MONTH) from UNNEST(order_array)

) as end_period_start_of_month

from customer_bulk_rows

-- clever filtering to the first row number

where rownum = 1

),

monthly_date_range_rows as (

-- generate monthly periods since our first order

SELECT first_of_month, DATE_SUB(DATE_ADD(first_of_month, INTERVAL 1 MONTH), INTERVAL 1 DAY) as end_of_month

FROM UNNEST(

GENERATE_DATE_ARRAY(DATE_TRUNC((select cast(min(creation_dts) as date) from `my-data-warehouse.my_dataset.uc_orders`), MONTH), CURRENT_DATE(), INTERVAL 1 MONTH)

) as first_of_month

),

customer_cohort_intermediate_rows as (

-- grab the main data, but strip off columns we don't need to output

select * except (order_array, end_period_start_of_month, rownum),

-- roll up LTV across all the orders

(

select sum(total_revenue) from UNNEST(order_array)

) as ltv,

-- generate the periods

array (

select as struct

-- nice incremently number by period

ROW_NUMBER() OVER ( PARTITION BY email_hash ) AS period_number,

-- date range of the period

period.first_of_month, period.end_of_month,

-- number of orders in the period

count(distinct(order_id)) as period_order_count,

-- revenue in the period

coalesce(sum(total_revenue), 0) as period_total_revenue,

-- array of orders that occurred during this period

(

SELECT ARRAY_AGG(t)

FROM (SELECT DISTINCT * FROM UNNEST(order_array) v where v.creation_date between period.first_of_month and period.end_of_month) t

) as orders

from UNNEST(order_array) o

RIGHT OUTER JOIN monthly_date_range_rows period on o.creation_dts between period.first_of_month and period.end_of_month

-- only look at periods that are between their first and last order

where period.first_of_month >= cohort_group and period.first_of_month <= end_period_start_of_month

group by period.first_of_month, period.end_of_month

) as periods

from customer_rows

order by cohort_group, email_hash

),

customer_cohort_rows as (

select *,

-- add in a count of the number of total periods

ARRAY_LENGTH(periods) as period_count

from customer_cohort_intermediate_rows

),

customer_cohort_agg_rows as (

select cohort_group, p.period_number, sum(p.period_total_revenue) as total_revenue

from customer_cohort_rows

CROSS JOIN UNNEST(periods) p

-- TODO: This is where you would filter down customers based upon the traffic source, affiliate id or initial item id

-- where utm_source = 'google'

group by cohort_group, p.period_number

order by cohort_group, p.period_number

)

-- Output a pivoted result of the revenue for each cohort group over the first 12 months for those customers

select *

from customer_cohort_agg_rows

PIVOT(sum(total_revenue) as period_total_revenue FOR period_number IN (

1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12

))

-- Start from a particular month and go forward

where cohort_group >= cast('2021-01-01' as date)

order by cohort_group

Auto Order Cohort Revenue

with

customer_bulk_rows as (

-- everythign is grouped by hashed email to count as a unique customer

select auto_order_oid as customer_key,

-- initial items on the order (except kit components)

ARRAY(

select as struct item.original_item_id from UNNEST(ao1.items) item

) as initial_item_ids,

-- advertising sources

(

select value from UNNEST(ao1.original_order.properties) where name = 'ucasource'

) as utm_source,

(

select value from UNNEST(ao1.original_order.properties) where name = 'ucacampaign'

) as utm_campaign,

ao1.original_order.affiliates[SAFE_OFFSET(0)].affiliate_oid,

-- core values about each order that we want in an array.

ARRAY(

select as struct ao1.original_order.order_id, ao1.original_order.summary.total.value as total_revenue, ao1.original_order.creation_dts, cast(ao1.original_order.creation_dts as date) as creation_date

union all

select as struct order_id, summary.total.value as total_revenue, creation_dts, cast(creation_dts as date) as creation_date

from ao1.rebill_orders

) as order_array,

-- clever use of run numbering which will be filtered in the next query to prevent correlation error

ROW_NUMBER() OVER ( PARTITION BY ao1.auto_order_oid ) AS rownum,

from `my-data-warehouse.my_dataset.uc_auto_orders` ao1

order by auto_order_oid

),

customer_rows as (

select *,

-- calculate their cohort startin group

(

select DATE_TRUNC(min(creation_date), MONTH) from UNNEST(order_array)

) as cohort_group,

-- calculate the last period they had any activity

(

select DATE_TRUNC(max(creation_date), MONTH) from UNNEST(order_array)

) as end_period_start_of_month

from customer_bulk_rows

-- clever filtering to the first row number

where rownum = 1

),

monthly_date_range_rows as (

-- generate monthly periods since our first order

SELECT first_of_month, DATE_SUB(DATE_ADD(first_of_month, INTERVAL 1 MONTH), INTERVAL 1 DAY) as end_of_month

FROM UNNEST(

GENERATE_DATE_ARRAY(DATE_TRUNC((select cast(min(original_order.creation_dts) as date) from `my-data-warehouse.my_dataset.uc_auto_orders`), MONTH), CURRENT_DATE(), INTERVAL 1 MONTH)

) as first_of_month

),

customer_cohort_intermediate_rows as (

-- grab the main data, but strip off columns we don't need to output

select * except (order_array, end_period_start_of_month, rownum),

-- roll up LTV across all the orders

(

select sum(total_revenue) from UNNEST(order_array)

) as ltv,

-- generate the periods

array (

select as struct

-- nice incremently number by period

ROW_NUMBER() OVER ( PARTITION BY customer_key ) AS period_number,

-- date range of the period

period.first_of_month, period.end_of_month,

-- number of orders in the period

count(distinct(order_id)) as period_order_count,

-- revenue in the period

coalesce(sum(total_revenue), 0) as period_total_revenue,

-- array of orders that occurred during this period

(

SELECT ARRAY_AGG(t)

FROM (SELECT DISTINCT * FROM UNNEST(order_array) v where v.creation_date between period.first_of_month and period.end_of_month) t

) as orders

from UNNEST(order_array) o

RIGHT OUTER JOIN monthly_date_range_rows period on o.creation_dts between period.first_of_month and period.end_of_month

-- only look at periods that are between their first and last order

where period.first_of_month >= cohort_group and period.first_of_month <= end_period_start_of_month

group by period.first_of_month, period.end_of_month

) as periods

from customer_rows

order by cohort_group, customer_key

),

customer_cohort_rows as (

select *,

-- add in a count of the number of total periods

ARRAY_LENGTH(periods) as period_count

from customer_cohort_intermediate_rows

),

customer_cohort_agg_rows as (

select cohort_group, p.period_number, sum(p.period_total_revenue) as total_revenue

from customer_cohort_rows

CROSS JOIN UNNEST(periods) p

-- TODO: This is where you would filter down customers based upon the traffic source, affiliate id or initial item id

-- where utm_source = 'google'

group by cohort_group, p.period_number

order by cohort_group, p.period_number

)

-- Output a pivoted result of the revenue for each cohort group over the first 12 months for those customers

select *

from customer_cohort_agg_rows

PIVOT(sum(total_revenue) as period_total_revenue FOR period_number IN (

1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12

))

-- Start from a particular month and go forward

where cohort_group >= cast('2021-01-01' as date)

order by cohort_group

Auto Order Cohort Active Count

with

customer_bulk_rows as (

-- everything is grouped by hashed email to count as a unique customer

select auto_order_oid as customer_key,

-- initial items on the order (except kit components)

ARRAY(

select as struct item.original_item_id from UNNEST(ao1.items) item

) as initial_item_ids,

-- advertising sources

(

select value from UNNEST(ao1.original_order.properties) where name = 'ucasource'

) as utm_source,

(

select value from UNNEST(ao1.original_order.properties) where name = 'ucacampaign'

) as utm_campaign,

ao1.original_order.affiliates[SAFE_OFFSET(0)].affiliate_oid,

-- is the auto order still active

DATE_TRUNC(coalesce(ao1.disabled_dts, ao1.canceled_dts), day) as churn_date,

-- core values about each order that we want in an array.

ARRAY(

select as struct ao1.original_order.order_id, ao1.original_order.summary.total.value as total_revenue, ao1.original_order.creation_dts, cast(ao1.original_order.creation_dts as date) as creation_date

union all

select as struct order_id, summary.total.value as total_revenue, creation_dts, cast(creation_dts as date) as creation_date

from ao1.rebill_orders

) as order_array,

-- clever use of run numbering which will be filtered in the next query to prevent correlation error

ROW_NUMBER() OVER ( PARTITION BY ao1.auto_order_oid ) AS rownum,

from `my-data-warehouse.my_dataset.uc_auto_orders` ao1

order by auto_order_oid

),

customer_rows as (

select *,

-- calculate their cohort startin group

(

select DATE_TRUNC(min(creation_date), MONTH) from UNNEST(order_array)

) as cohort_group,

-- calculate the last period they had any activity

DATE_TRUNC(CURRENT_DATE(), MONTH) as end_period_start_of_month

from customer_bulk_rows

-- clever filtering to the first row number

where rownum = 1

),

monthly_date_range_rows as (

-- generate monthly periods since our first order

SELECT first_of_month, DATE_SUB(DATE_ADD(first_of_month, INTERVAL 1 MONTH), INTERVAL 1 DAY) as end_of_month

FROM UNNEST(

GENERATE_DATE_ARRAY(DATE_TRUNC((select cast(min(original_order.creation_dts) as date) from `my-data-warehouse.my_dataset.uc_auto_orders`), MONTH), CURRENT_DATE(), INTERVAL 1 MONTH)

) as first_of_month

),

customer_cohort_intermediate_rows as (

-- grab the main data, but strip off columns we don't need to output

select * except (order_array, end_period_start_of_month, rownum),

-- roll up LTV across all the orders

(

select sum(total_revenue) from UNNEST(order_array)

) as ltv,

-- generate the periods

array (

select as struct

-- nice incremently number by period

ROW_NUMBER() OVER ( PARTITION BY customer_key ) AS period_number,

-- date range of the period

period.first_of_month, period.end_of_month,

-- number of orders in the period

count(distinct(order_id)) as period_order_count,

-- revenue in the period

coalesce(sum(total_revenue), 0) as period_total_revenue,

-- active still during this period

case when churn_date is null then 1

when churn_date < period.first_of_month then 0

when churn_date >= period.first_of_month then 1

else 0

end as active_during_period,

-- array of orders that occurred during this period

(

SELECT ARRAY_AGG(t)

FROM (SELECT DISTINCT * FROM UNNEST(order_array) v where v.creation_date between period.first_of_month and period.end_of_month) t

) as orders

from UNNEST(order_array) o

RIGHT OUTER JOIN monthly_date_range_rows period on o.creation_dts between period.first_of_month and period.end_of_month

-- only look at periods that are between their first and last order

where period.first_of_month >= cohort_group and period.first_of_month <= end_period_start_of_month

group by period.first_of_month, period.end_of_month

) as periods

from customer_rows

order by cohort_group, customer_key

),

customer_cohort_rows as (

select *,

-- add in a count of the number of total periods

ARRAY_LENGTH(periods) as period_count

from customer_cohort_intermediate_rows

),

customer_cohort_agg_rows as (

select cohort_group, p.period_number, sum(p.active_during_period) as active_auto_order_count

from customer_cohort_rows

CROSS JOIN UNNEST(periods) p

-- TODO: This is where you would filter down customers based upon the traffic source, affiliate id or initial item id

-- where utm_source = 'google'

group by cohort_group, p.period_number

order by cohort_group, p.period_number

),

period_rows as (

-- Output a pivoted result of the revenue for each cohort group over the first 24 months for those auto order cohorts

select *

from customer_cohort_agg_rows

PIVOT(sum(active_auto_order_count) as period_active_auto_order_count FOR period_number IN (

1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24

))

-- Start from a particular month and go forward

where cohort_group >= cast('2021-01-01' as date)

order by cohort_group

)

select * from period_rows

Auto Order Cohort Active Percentage

with

customer_bulk_rows as (

-- everything is grouped by hashed email to count as a unique customer

select auto_order_oid as customer_key,

-- initial items on the order (except kit components)

ARRAY(

select as struct item.original_item_id from UNNEST(ao1.items) item

) as initial_item_ids,

-- advertising sources

(

select value from UNNEST(ao1.original_order.properties) where name = 'ucasource'

) as utm_source,

(

select value from UNNEST(ao1.original_order.properties) where name = 'ucacampaign'

) as utm_campaign,

ao1.original_order.affiliates[SAFE_OFFSET(0)].affiliate_oid,

-- is the auto order still active

DATE_TRUNC(coalesce(ao1.disabled_dts, ao1.canceled_dts), day) as churn_date,

-- core values about each order that we want in an array.

ARRAY(

select as struct ao1.original_order.order_id, ao1.original_order.summary.total.value as total_revenue, ao1.original_order.creation_dts, cast(ao1.original_order.creation_dts as date) as creation_date

union all

select as struct order_id, summary.total.value as total_revenue, creation_dts, cast(creation_dts as date) as creation_date

from ao1.rebill_orders

) as order_array,

-- clever use of run numbering which will be filtered in the next query to prevent correlation error

ROW_NUMBER() OVER ( PARTITION BY ao1.auto_order_oid ) AS rownum,

from `my-data-warehouse.my_dataset.uc_auto_orders` ao1

order by auto_order_oid

),

customer_rows as (

select *,

-- calculate their cohort startin group

(

select DATE_TRUNC(min(creation_date), MONTH) from UNNEST(order_array)

) as cohort_group,

-- calculate the last period they had any activity

DATE_TRUNC(CURRENT_DATE(), MONTH) as end_period_start_of_month

from customer_bulk_rows

-- clever filtering to the first row number

where rownum = 1

),

monthly_date_range_rows as (

-- generate monthly periods since our first order

SELECT first_of_month, DATE_SUB(DATE_ADD(first_of_month, INTERVAL 1 MONTH), INTERVAL 1 DAY) as end_of_month

FROM UNNEST(

GENERATE_DATE_ARRAY(DATE_TRUNC((select cast(min(original_order.creation_dts) as date) from `my-data-warehouse.my_dataset.uc_auto_orders`), MONTH), CURRENT_DATE(), INTERVAL 1 MONTH)

) as first_of_month

),

customer_cohort_intermediate_rows as (

-- grab the main data, but strip off columns we don't need to output

select * except (order_array, end_period_start_of_month, rownum),

-- roll up LTV across all the orders

(

select sum(total_revenue) from UNNEST(order_array)

) as ltv,

-- generate the periods

array (

select as struct

-- nice incremently number by period

ROW_NUMBER() OVER ( PARTITION BY customer_key ) AS period_number,

-- date range of the period

period.first_of_month, period.end_of_month,

-- number of orders in the period

count(distinct(order_id)) as period_order_count,

-- revenue in the period

coalesce(sum(total_revenue), 0) as period_total_revenue,

-- active still during this period

case when churn_date is null then 1

when churn_date < period.first_of_month then 0

when churn_date >= period.first_of_month then 1

else 0

end as active_during_period,

-- array of orders that occurred during this period

(

SELECT ARRAY_AGG(t)

FROM (SELECT DISTINCT * FROM UNNEST(order_array) v where v.creation_date between period.first_of_month and period.end_of_month) t

) as orders

from UNNEST(order_array) o

RIGHT OUTER JOIN monthly_date_range_rows period on o.creation_dts between period.first_of_month and period.end_of_month

-- only look at periods that are between their first and last order

where period.first_of_month >= cohort_group and period.first_of_month <= end_period_start_of_month

group by period.first_of_month, period.end_of_month

) as periods

from customer_rows

order by cohort_group, customer_key

),

customer_cohort_rows as (

select *,

-- add in a count of the number of total periods

ARRAY_LENGTH(periods) as period_count

from customer_cohort_intermediate_rows

),

customer_cohort_agg_rows as (

select cohort_group, p.period_number, sum(p.active_during_period) as active_auto_order_count

from customer_cohort_rows

CROSS JOIN UNNEST(periods) p

-- TODO: This is where you would filter down customers based upon the traffic source, affiliate id or initial item id

-- where utm_source = 'google'

group by cohort_group, p.period_number

order by cohort_group, p.period_number

),

customer_cohort_agg_rows2 as (

select cohort_group, period_number, ROUND(SAFE_DIVIDE(active_auto_order_count,

(

select max(active_auto_order_count) from customer_cohort_agg_rows ccar2 where ccar2.cohort_group = ccar1.cohort_group

)) * 100.0, 1) as active_percentage

from customer_cohort_agg_rows ccar1

order by cohort_group, period_number

),

period_rows as (

-- Output a pivoted result of the revenue for each cohort group over the first 24 months for those auto order cohorts

select *

from customer_cohort_agg_rows2

PIVOT(sum(active_percentage) as period_active_auto_order_percentage FOR period_number IN (

1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24

))

-- Start from a particular month and go forward

where cohort_group >= cast('2021-01-01' as date)

order by cohort_group

)

select * from period_rows

Order Upsells

Which upsells were seen by an order and what were the outcomes?

WITH path_offers as (

select sup.name as path_name, supvo.value as storefront_upsell_offer_oid

from `ultracart_dw.uc_storefront_upsell_paths` sup

CROSS JOIN UNNEST(variations) supv

CROSS JOIN UNNEST(visibility_ordered_offer_oids) supvo

),

order_upsell_rows as (

select

po.path_name,

suo.name as offer_name,

upsell_item_ids[SAFE_OFFSET(0)].value as upsell_item_id, suoe.order_id,

case

when successful_charge = 1 then true

else false

end as took_upsell,

revenue,

profit,

screen_size,

quantity,

refund_quantity,

o.creation_dts as order_dts

from `ultracart_dw.uc_storefront_upsell_offer_events` suoe

LEFT JOIN `ultracart_dw.uc_storefront_upsell_offers` suo on suo.storefront_upsell_offer_oid = suoe.storefront_upsell_offer_oid

LEFT JOIN `path_offers` po on po.storefront_upsell_offer_oid = suo.storefront_upsell_offer_oid

LEFT JOIN `ultracart_dw.uc_orders` o on o.order_id = suoe.order_id

where suoe.order_id is not null

order by order_id, event_dts

)

select * From order_upsell_rows where order_dts >= DATE_SUB(CURRENT_DATE(), interval 1 year)